2009/11/14

ITcookbook

2008/01/28

In Defense of UAC

This is me, editorializing - not an activity I prefer to use this blog for. But occasionally something seems so nonsensical to me that I just have to speak up. And today it's UAC that has me speaking up.

I'm greatly disappointed by the number of true techies and semi-techies who have gone into a sort of 'hate UAC' mode. I understand their frustrations; UAC can slow down routine sysadmin activities and break into the flow of your day. But, like seatbelts in a car, it's one of those things that seems like a terrible imposition at first, then just blends into your routine to the point where you hardly think about it. You buckle up and you're safer because you did it. I was one of those guys who hated seatbelt laws when they first appeared. But I got used to it, and now that I've survived a rollover accident because of a seatbelt, I'm pretty glad I did!

I've been running Windows systems according to the least privilege principle ever since Windows NT 4. I call this nonadmin because it's less of a mouthful. And I've been forcing users to do it, and begging my co-administrators to do it, since 1996. But I can't lie: nonadmin was a huge pain in the NT4/W2000/XP/W2003 days; the RunAs utility was a major PITA. You had to use special workarounds to elevate essential Windows tools like the file Explorer. There were a great many programs which didn't work well (or at all!) in a nonadmin context. Running nonadmin was doable ... but only with great self discipline, and a fair amount of pain as you worked through the changes in habits and gained the knowledge required to get it done.

In a corporate setting, it is possible for the administrator to do all the work and leave the regular office users blissfully unaware of the fact that they are nonadmin. But when you're the administrator - as home users and many smallbiz users are - the time and effort required to go nonadmin are just more than most people are prepared to expend. So they ignored the issue. And it was easy enough to do; most folks were completely unaware that MS had already made them administrators during the OS install!

There's another group of power users who were also blissfully unaware of their admin status: application coders and testers. Because they didn't know (or did not care) that they were administrators, it was very easy to write programs in ways that required admin capabilities to run properly. And this perpetuated the problem. It became a catch-22: users were admins because devs were. And devs were admins because users were.

Microsoft needed to break out of this loop, and they knew it. And UAC was born. It's actually an interesting compromise: because of all those legacy applications which require admin privs, MS couldn't simply force all users to a full nonadmin mode, which would have been technically preferable. So UAC strikes a middle ground: you're still an administrator by default. But ... not really.

Márton Anka explains this well, so I will simply summarize: all accounts you create during Vista's setup are still members of the Administrators group. But when these accounts log in, all normal operations are carried out with a Limited User's access level. However, when Vista sees you do something that requires admin privs, it presents the UAC dialog box before it elevates privs for that process only. Unless you are running in the account named Administrator, in which case you never see the UAC prompt. Also note that users created after Vista setup do default to a Limited User status; they can elevate privs via UAC but must type a username and password to do so.

So why do I defend all this, while so many other techies are railing against it? Well, remember my saying how hard it was to run nonadmin with just the RunAs tool? UAC now makes all those difficulties a thing of the past.

I run as a Limited User, so every UAC prompt means I need to enter the name of my admin user, and its password. And I saw quite a lot of UAC while installing Vista, and in the two weeks afterwards. I did get a little sick of it, to be honest. But I kept reminding myself of the pain of RunAs, and I soldiered on. Finally, after setting up all my apps, and making various little tweaks and twiddles here and there (as we all tend to do), and exploring the various new administrative features of Vista ... I noticed that I was hardly seeing the UAC prompt at all.

Fast forward to the present. Though I still keep an XP system handy, Vista is the OS I spend my day in. From here I do of course RDP/VNC/SSH to other systems. As a sysadmin of course I'm always poking my nose into things. But honestly, I think I've seen fewer than 5 UAC prompts in the last 7 days - and I've installed one new app and upgraded another in that time.

So all that RunAs futzing is gone now - I only need to use RunAs for the rare occasions when I need to have an administrator-level instance of cmd. Instead of me having to remember to elevate my privs, Vista does the remembering for me. And whenever I see the UAC prompt, I pause for a second and ask myself whether I expected this. If I didn't expect it, I can ask the other relevant questions, like:

- Do I recognize the program that wants elevated privs?

- Do I trust it?

- Do I have a reasonable understanding of why it needs elevated privs? (Do I know what it will do with them?)

- Does the action really need to have full unfettered access to my system and data?

If I can't give myself satisfactory answers to these questions, I can just say no. And that's the end of it, clean and simple.

UAC is also providing a lot of back-pressure on program developers and testers to organize their programs and associated data into non-critical areas of the system, and this is a good thing. It means that new programs will be more stable and less risky to run, because developers themselves will not want to be hit with constant UAC dialogs. In the long run, this may be the largest benefit UAC provides.

In summary - UAC makes it easier to run nonadmin, and that's a good thing. It provides incentive for developers to write better code, and that's an excellent thing. I'm a big fan of UAC, and I hope the naysayers will see the light. Just as the seatbelt-haters did.

2008/01/19

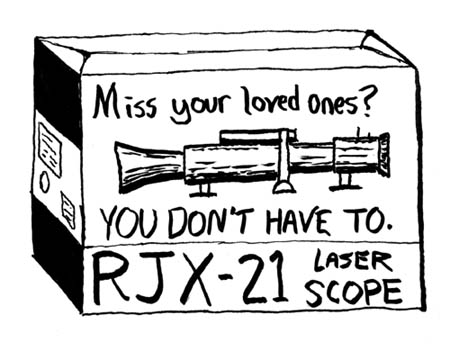

Miss me?

Contritely, I have to admit that I just haven't been blogging a lot at all lately. Somehow I got to thinking of my blog entries as things which should require doing the research of getting all the facts exactly right, taking screenshots, and coming up with exactly the right wording - well .. it is a time sink. And my time has been going other places lately.

But 2008 has rolled around, and I do still have stuff to share. Also, I've discovered this so-called tumbleblog phenomenon. Like 'blogosphere', I'm not sure I like the word, but I do like the concept, which is basically: less organization, more quick hits. So I proudly present my very own tumbleblog: . It is mostly just a collection of links and pithy observations I make on the fly as I go about my techie day.

I should also come clean about the many, err, 'almost adminfoo ready' bits 'n pieces that I have stored away on my wiki at http://quux.wiki.zoho.com/. So, I have been writing; it just hasn't been making it to this here blog. My apologies for not pointing this out sooner.

Also, I'm taking steps which may free up the time to do more proper adminfoo blogging. No promises yet, but let's all keep our fingers crossed!

2007/04/04

Windows Perfmon: The Top Ten Counters

To open PerfMon, just go to the Start Menu, choose Run and type perfmon.

Bottleneck analysis

The most common use of PerfMon is to answer the burning question: why is my system running slow?

With the five performance counters listed below, you can quickly get an overall impression of how healthy a system is - and where the problems are, if they exist. The idea here is to pick counters that will be at low or zero values when the system is healthy, and at high values when something is overloaded. A 'perfectly healthy' system would show all counters flatlined at zero. (Perfection is unattainable, so you'll probably never see all of these counters flatlined at zero in real life. The CPU will almost always have a few items in queue.)

- Processor utilization

- System\Processor Queue Length - number of threads queued and waiting for time on the CPU. Divide this by the number of CPUs in the system. If the answer is less than 10, the system is most likely running well.

- Memory utilization

- Memory\Pages Input/Sec - The best indicator of whether you are memory-bound, this counter shows the rate at which pages are read from disk to resolve hard page faults. In other words, the number of times the system was forced to retrieve something from disk that should have been in RAM. Occasional spikes are fine, but this should generally flatline at zero.

- Disk Utilization

- PhysicalDisk\Current Disk Queue Length\driveletter - this is probably the single most valuable counter to watch. It shows how many read or write requests are waiting to execute to the disk. For single disks, it should idle at 2-3 or lower, with occasional spikes being okay. For RAID arrays, divide by the number of active spindles in the array; again try for 2-3 or lower. Because a shortage of RAM will tend to beat on the disk, look closely at the Memory\Pages Input/Sec counter if disk queue lengths are high.

- Network Utilization

- Network Interface\Output Queue Length\nic name - is the number of packets in queue waiting to be sent. If there is a sustained average of more than two packets in queue, you should be looking to resolve a network bottleneck.

- Network Interface\Packets Received Errors\nic name - packet errors that kept the TCP/IP stack from delivering packets to higher layers. This value should stay low.

Pay close attention to the scale column! Perfmon attempts to automatically pick a scale that will magnify or reduce the counter enough to produce a meaningful line on the graph ... but it doesn't always get it right. As an example, Perfmon often chooses to multiply Disk Queue Length by 100. So, you might think the disk queue length is sustained at 10 (bad!) when in fact it's really at 1 (good). If you're not sure, highlight the counter in the lower pane, and watch the Last and Average values just below the graph. In the screenshot below, I modified all of the counters to a scale value of 1.0, then changed the graph's vertical axis to go from 0-10.

To change graph properties (like scale and vertical axis as discussed above), rightclick the graph and choose Properties. There are a number of things to customize here ... fiddle with it until you have a graph that looks good to you.

To get a more detailed explanation of any counter, rightclick anywhere in the perfmon graph and choose Add Counters. Select the counter and object that you are curious about, and click the Explain button.

This screenshot shows a very lightly-loaded XP system, with the Memory\Pages Input/Sec counter highlighted:

All we see here is the Processor Queue Length hovering between 1 and 4, and two short spikes of Pages Input/Sec. All other counters are flatlined at zero, which is easy to check by highlighting each of them and watching the values bar underneath the graph. This is a happy system - no problems here!

But if we saw any of the above counters averaging more than 2-4 for long periods of time (except Processor Queue Length: don't worry unless it's above 10 for long lengths of time), we'd be able to conclude that there was a problem with that subsystem. We could then drill down using more detailed counters to see exactly what was causing that subsystem to be overloaded. More detailed analysis is beyond the scope of this article, but if there's enough interest I could do a second article on that. Leave a comment if you're interested!
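The rules of thumb above can be sketched as a small check. This is only an illustration under my own assumptions: the function name `find_bottlenecks`, the dictionary keys, and the exact cutoffs (taking the upper end of each "2-3" or "2-4" range) are my reading of the guidance in this article, not anything PerfMon provides. You would feed it sustained averages that you read off the graph yourself.

```python
from typing import Dict, List


def find_bottlenecks(avg: Dict[str, float], cpus: int = 1, spindles: int = 1) -> List[str]:
    """Return the subsystems whose sustained averages exceed the article's rules of thumb.

    avg maps counter names to sustained average values (hypothetical keys).
    Missing counters are treated as flatlined at zero.
    """
    flagged = []
    # Processor Queue Length is judged per CPU; worry only above 10.
    if avg.get("Processor Queue Length", 0) / cpus > 10:
        flagged.append("processor")
    # Pages Input/Sec should idle near zero; a sustained average above 4 is suspect.
    if avg.get("Pages Input/Sec", 0) > 4:
        flagged.append("memory")
    # Disk queue is judged per active spindle; 2-3 or lower is fine.
    if avg.get("Current Disk Queue Length", 0) / spindles > 3:
        flagged.append("disk")
    # More than two packets queued, or a non-trivial error count, points at the network.
    if avg.get("Output Queue Length", 0) > 2 or avg.get("Packets Received Errors", 0) > 4:
        flagged.append("network")
    return flagged


# A lightly loaded system like the one described above: nothing is flagged.
print(find_bottlenecks({"Processor Queue Length": 2.5, "Pages Input/Sec": 0.3}))  # prints []
```

Note that a high disk queue alongside high Pages Input/Sec will flag both memory and disk; as mentioned earlier, in that case look at memory first.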

General activity counters

Well, the system is healthy - and that's good ... but how hard is it working? Is the processor workin' hard, or hardly workin'? How much RAM is in use, how many bytes are being written to or read from the disk or network? The following counters are a good overview of general activity of the system.

- Processor utilization

- Processor\% Processor Time\_Total - just a handy idea of how 'loaded' the CPU is at any given time. Don't confuse 100% processor utilization with a slow system though - processor queue length, mentioned above, is much better at determining this.

- Memory utilization

- Process\Working Set\_Total (or per specific process) - this basically shows how much memory is in the working set, or currently allocated RAM.

- Memory\Available MBytes - amount of free RAM available to be used by new processes.

- Disk Utilization

- PhysicalDisk\Disk Bytes/sec\_Total (or per disk) - shows the number of bytes per second being written to or read from the disk.

- Network Utilization

- Network Interface\Bytes Total/Sec\nic name - Measures the number of bytes sent or received.

And ... that's all for now. Hopefully this quick show-and-tell has given you enough information to use PerfMon more usefully in the future!

2007/03/29

OS Vulnerabilities Compared

- Install the OS as default-ly as possible. Scan it with nmap and Nessus during the installation.

- At completion of installation, scan again.

- Install relatively common listening services and scan again.

- Install the latest ‘major patch’, and scan again.

- Finally install all ‘minor patches’ published prior to Jan 1 2007, and scan again.

I very much encourage you to read the full report, but one thing I sorely missed was a summary chart so I could get a better sense of what all that verbiage really means. So I created one – you see it below.

Some important points about this summary chart:

- I left out the ‘mid-install’ scan info. I’m assuming y’all have the sense not to build your critical machines whilst connected to attack-prone networks.

- The study mentions local vulnerabilities in one or two places, but is primarily concerned with remote vulns. In the ‘vulns’ column I list only those remote-exploitable vulns found by Nessus.

- I’m not 100% sure I have the numbers exactly right. In some places the report was confusingly worded. I think I have preserved the author’s intent and I really hope he’ll let me know if I fumbled the ball.

- I list port names for a reason. It seemed to me that in at least some cases, the choice of services to install in the ‘services installed’ config was a bit arbitrary. I note that some server OS have a web server enabled, some do not. So I thought this was important to include!

- ICMP is not counted as one of the open ports.

- As best I can tell, no firewall is enabled in any of the tests. In some cases, default firewalls were explicitly shut off.

There’s a lot to be learned here. For now, I’m drawing no conclusions. But I welcome yours, in the comments!

I'm sorry the above image is so small - click it to see at readable size. I tried to use an actual html table, so you'd be able to cut/paste from it, but I'm learning that Blogger likes to mangle html in its own special ways, so for now we'll have to make do with this image.

2007/03/26

The SHTF Plan

- First confirm your fears. You don't want to be the boy who cried wolf; you need to have a firm basis for your theory that someone has hacked (or is hacking) your network. At this stage, don't worry about mitigation. Just be sure you have enough evidence to form a solid basis for your theory that malicious activity is going on.

- Document everything. Start a timestamped log (textfile or handwritten notes) and gather your evidence nondestructively. Throughout the following steps, keeping a timestamped log of who was doing what is going to be important for many reasons. When it’s all done, you’ll need a record of what was damaged, what was changed, what was offline and for how long. The records will also be valuable in possible legal actions, and for planning for your next incident.

- Assemble the team. By which I really mean - get your boss on the horn. At this point you want only the boss - don't involve coworkers. Let boss know what's going on, and what your evidence is. Emanate calmness and rationality here! No one is helped by a sense of fear or excitement or, dare I say it, panic. Suggest that the boss may want to involve legal, public relations, HR, line of business stakeholders, other IT workers - but let the boss decide and make those calls. Let him know that you're going to take no action other than diagnostic and getting your notes in order while he's assembling the team.

- Make preliminary decisions. Now that the team is together, you’re going to need to present the information you’ve gathered so far. Next, your team has a few relatively simple decisions to make:

- Who has ultimate authority? This is not the time to have everyone off playing hero in an uncoordinated response. You need a ‘fire chief’ – and the chief needs to know what everyone is doing.

- Priorities. Which is more important – fixing damages, closing the hole, preserving evidence so you can prosecute the attacker? You’re aiming to get a ranked priority list here. A bit of advice: do what you can to make sure that blame is de-prioritized. Let all members of the team know that this is most definitely not the time to even think about finger pointing!

- Who will work on what?

- How often should the response team get together to assess status and make decisions?

- Communication plan. IT workers will want to be able to concentrate – their effectiveness is vastly reduced if they have to answer a ringing phone every five minutes. Work out a quick plan to avoid that, and put one person in charge of disseminating information to whoever else needs it. Let him be the guy whose phone rings every 5 minutes.

- Work plan. Whoever is in charge will need to marshal forces carefully. In an event like this, people tend to overwork themselves – that can lead to mistakes, or a skeleton crew available tomorrow when the next problem happens. Be sure to rotate people effectively.

- Assess damage (and damage potential). Now, with the team assembled and preliminary decisions made, it’s time to reconsider the damage done and the potential for more damage. Your team may need to do more investigation at this point, or it may have enough information to proceed to the next step.

- Stop the spread. Consider whether you need to shut down servers, services, network links, etc. Maybe a firewall rule change is enough. Think about how to make the smallest (and least destructive) changes that have the greatest impact on slowing the growth of the problem.

- Notification plan. You’re probably going to have to tell your users about an outage. But what about customers, business partners, law enforcement? Consider carefully the phrasing and timing of such notifications. This is a management task.

- Remediate. OK, now that you understand the damage, and you’ve stopped the spread, it’s time to consider the cleanup plan. This is going to depend a lot on the priorities set back in the ‘Make preliminary decisions’ step – do you need to preserve evidence for later legal or disciplinary action? Which systems need to be cleaned, secured, and brought back online first? What can wait?

- Post-Mortem. The post mortem is often skipped, as exhausted people all take their much-deserved rest, and then return to all those tasks they deferred whilst working on the outage. But impress on your boss the need for spending a couple of hours critiquing the incident, running down loose ends, and so on. It’ll be worth it – though you may never be able to measure that worth. Because you’ll never know about the problems your post-mortem successfully prevents!

Even if you can't get the post-mortem going, this is the best time to hit up your boss for funding the protective measures you wish you'd had before. Could be a new backup system, better network partitioning via VLANs - whatever. The iron is hottest about a week after the event. Strike it!

To me, most of the above seems like just plain common sense. But, having been through more than a few SHTF scenarios, I am always amazed by the number of  people who, in their zeal to fix the problem, take leave of common sense, or do something which looks sensible from their POV but proves wasteful or even counterproductive when you step back and take the wide angle view.


2007/03/23

MTBF: Not What You Thought

It has recently come to my attention that most of us in the trade have basically the wrong idea about MTBF. You know the term, right? Mean Time Between Failures - which is a figure we often look at; especially when we choose disks for our arrays. The problem? In the words of the great Inigo Montoya:

You keep using that word. I do not think it means what you think it means.

A little reading over at Wikipedia will soon disabuse you of the idea that when a disk's spec-sheet says the MTBF is 50 years, that disk will actually last 50 years. Oh, hmm, you say you took a look at that Wikipedia page, and the math scared you? Yeah - me too. So let's ask Richard Elling (Sun.com) to cut through the fog with a real-world example:

MTBF is a summary metric, which hides many important details. For example, data collected for the years 1996-1998 in the US showed that the annual death rate for children aged 5-14 was 20.8 per 100,000 resident population. This shows an average failure rate of 0.0208% per year. Thus, the MTBF for children aged 5-14 in the US is approximately 4,807 years. Clearly, no human child could be expected to live 5,000 years. Similarly, if a vendor says that the disk MTBF is 1 Million hours (114 years), you cannot expect a disk to last that long.

Oh. Well, dang. I guess that disk ain't very likely to last 50 years!
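If you'd like to check the arithmetic in that quote, here is a minimal sketch. The function names are my own, and the last calculation assumes a constant failure rate (the usual exponential model), which is a simplification on my part and not something the quote claims.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def mtbf_years_from_annual_rate(failures_per_unit_per_year: float) -> float:
    """MTBF in years is simply the reciprocal of the annual failure rate."""
    return 1.0 / failures_per_unit_per_year


def annual_failure_probability(mtbf_hours: float) -> float:
    """Chance that one unit fails within a year, ASSUMING a constant failure rate."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


# 20.8 deaths per 100,000 children per year -> an "MTBF" of roughly 4,800 years
print(mtbf_years_from_annual_rate(20.8 / 100_000))

# A 1 million hour MTBF expressed in years -> roughly 114 years
print(1_000_000 / HOURS_PER_YEAR)

# The same disk's chance of failing in any given year -> a bit under 0.9%
print(annual_failure_probability(1_000_000))
```

Read that last number the other way around: out of 1,000 such disks, you'd expect somewhere around 9 to fail each year. That is a far more useful way to think about a big MTBF figure than "this disk lasts 114 years".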

My rule of thumb? Check the warranty coverage. The manufacturer with the longest warranty at the lowest price is the one most confident in the durability of their drives.

2007/03/19

Adminfoo is baaaaaack!

I have to admit a bit of embarrassment here. I've always preached the Gospel of Backups, and so it's bitterly ironic that I didn't have a backup of the old adminfoo site. Now, this really is something I checked into before signing up with my former host - and they assured me they kept backups in three separate places. So I let that lull me into a false sense of confidence. I should have been keeping my own backups - and I can assure you I will be doing so from here on out. They'll be a simple wget dump of the site - but at least they'll exist.

If you happen to be one of the adminfoo readers who has found your way back to the site after this long hiatus: welcome back! I can't help but feel that I owe you two apologies: one for having dropped out of site for so long, and another for breaking the links to any articles you might have bookmarked. Sorry about that. Really, really sorry about that!

And so, here we are again. Back from the grave, and now hosted on . I did spend a lot of time looking into other host providers, other platforms, and so on ... and I finally decided to just let Google (who owns Blogger) do all the work for me. Sure I lose a couple of features over the excellent Drupal platform I was using before, but this is gonna save me a lot of work. No longer will I worry about my host provider being down, or how to implement a new anti-spam system, or the overhead of a lot of features that I really didn't need. It has always been my intent to write often and insightfully about the craft of systems administration, and I now realize that I was putting far too much effort into presentation, and not enough into the writing.

Ah, yes, the writing. Over the years my propensity for writing has ebbed and flowed on a schedule all its own. But the dry spells always end, and right now I feel a new wellspring of creativity a-blooming (pardon the mixed metaphor). Coming up, I plan to continue in the old vein, with commentary on strategy, tactics, and tools. I'll also bring back much of the old content, as I've found it's not that hard to just cut-and-paste from my old data to this new copy of adminfoo. It's time-consuming, though, and I have to do some manual reformatting. So I'm bringing back the oldest posts first, and I'll be slowly working through all the old content.

- The 'So You Inherited A Network' series I planned out over a year ago, and never got around to writing.

- Map of systems administration knowledge. This will be on wiki.adminfoo.net, which is right now just a twinkle in my eye. The SAKmap is not my idea, actually; it belongs to another fellow whom I hope to convince to write here as well. I'm not sure he's aware of that yet, so I'll keep his name to myself for the time being.

- OS patch reports. I admin a few Windows servers, and a few Linux ones too. While it might seem boring, I think a lot of people will benefit from seeing a list of patches applied to running systems - and knowing that in at least one case, those patches did or did not blow up the systems. Where I run into troubles or gotchas, I'll let you know - but I expect (and hope!) that most of those entries will be boring, predictable: applied patches foo, bar, and baz. No problems!

- A few other ideas, which I'm keeping to myself right now. Mainly because, well, I dunno if I'll ever get around to, er, doing them.

2004/10/28

Don't cache 'negative' DNS lookups on Windows systems

- You try to connect to somesystem.yourdomain.com and fail - the name cannot be looked up.

- You discover that the DNS record is missing in your DNS server, and you fix it by adding the correct record.

- ... but you still can't connect to somesystem.yourdomain.com from your workstation!

What's happening here is that your system has cached a 'negative lookup'. Your local DNS cache basically doesn't think the DNS name exists - and it will go on thinking that until the cached entry expires.

Here is an example:

```
C:\Tools>ipconfig /displaydns

Windows IP Configuration

1.0.0.127.in-addr.arpa
----------------------------------------
Record Name . . . . . : 1.0.0.127.in-addr.arpa.
Record Type . . . . . : 12
Time To Live . . . . : 0
Data Length . . . . . : 4
Section . . . . . . . : Answer
PTR Record . . . . . : localhost

nosuchmachine.cojones.org
----------------------------------------
Name does not exist.

adminfoo.net
----------------------------------------
Record Name . . . . . : adminfoo.net
Record Type . . . . . : 1
Time To Live . . . . : 308
Data Length . . . . . : 4
Section . . . . . . . : Answer
A (Host) Record . . . : 67.15.36.7

localhost
----------------------------------------
Record Name . . . . . : localhost
Record Type . . . . . : 1
Time To Live . . . . : 0
Data Length . . . . . : 4
Section . . . . . . . : Answer
A (Host) Record . . . : 127.0.0.1
```

Here we see that the machine nosuchmachine.cojones.org was looked up, and found to be nonexistent. Now, even if I go and create a DNS record for nosuchmachine, my host will not resolve that name until the 'negative result' entry is flushed from my cache. I can manually flush it with an ipconfig /flushdns command.

Or I could put the following registry entries into my system:

```
Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Dnscache\Parameters]
"NegativeCacheTime"=dword:00000000
"NetFailureCacheTime"=dword:00000000
"NegativeSOACacheTime"=dword:00000000
```

Essentially this will tell my system to never cache 'negative lookups'.
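To make the behavior concrete, here is a toy model of a resolver cache with negative caching. It is only a sketch: the class name `ToyResolverCache`, its parameters, and the example address are all made up for illustration, and it says nothing about how the Windows DNS Client service is actually implemented. It just shows why a cached 'does not exist' answer hides a freshly added record, and why a flush or a negative cache time of zero makes the problem go away.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class ToyResolverCache:
    """Toy DNS cache: remembers answers, including 'name does not exist'."""

    def __init__(
        self,
        lookup: Callable[[str], Optional[str]],  # asks the 'DNS server'; None means no such name
        negative_ttl: float,                     # seconds to remember a failed lookup
        positive_ttl: float = 300.0,             # seconds to remember a good answer
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._lookup = lookup
        self._negative_ttl = negative_ttl
        self._positive_ttl = positive_ttl
        self._clock = clock
        self._cache: Dict[str, Tuple[Optional[str], float]] = {}

    def resolve(self, name: str) -> Optional[str]:
        now = self._clock()
        entry = self._cache.get(name)
        if entry is not None and entry[1] > now:
            return entry[0]  # may be None: a cached negative result
        address = self._lookup(name)
        ttl = self._positive_ttl if address is not None else self._negative_ttl
        if ttl > 0:
            self._cache[name] = (address, now + ttl)
        else:
            self._cache.pop(name, None)  # a TTL of zero means: never cache this kind of answer
        return address

    def flush(self) -> None:
        """The toy equivalent of ipconfig /flushdns."""
        self._cache.clear()


zone: Dict[str, str] = {}  # stands in for the DNS server's records
cache = ToyResolverCache(zone.get, negative_ttl=300.0)

print(cache.resolve("somesystem.yourdomain.com"))   # None: the record is missing
zone["somesystem.yourdomain.com"] = "192.0.2.10"    # the admin adds the record
print(cache.resolve("somesystem.yourdomain.com"))   # still None: negative result is cached
cache.flush()
print(cache.resolve("somesystem.yourdomain.com"))   # 192.0.2.10
```

Constructing the cache with `negative_ttl=0` models the registry change: the second lookup would succeed without any flush.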

2004/10/27

Find rogue DHCP servers!

Basically this commandline tool can run on a Windows host and send a DHCP request, then report all servers which answer. It won't actually claim the DHCP address, though. You can also leave it running for a while and it will beep and add a new line of output anytime it sees a DHCP request or offer on the wire - all DHCP packets are broadcast so it doesn't need to make your NIC promiscuous. Output looks like this. Packet sniffing (with the right filter) using Ethereal or some such would accomplish the same goal, but dhcploc is a quick and easy tool you can have a remote user (or customer) run for you without a lot of hand-holding.

You can download dhcploc along with some other Windows diagnostic tools here.
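For the curious, the basic idea (broadcast a DHCP discover, list whoever answers, never follow up with a request) can be sketched in a few lines of Python. To be clear, this is not how dhcploc itself is written, which I don't know; it is a rough sketch of the same technique using the standard packet layout from RFC 2131. The function names are mine, and binding to port 68 generally needs admin/root rights and may fail if the local DHCP client already holds that port.

```python
import os
import socket
import struct
from typing import Set

MAGIC_COOKIE = b"\x63\x82\x53\x63"  # marks the start of the DHCP options field


def build_discover(mac: bytes, xid: int) -> bytes:
    """Build a minimal DHCPDISCOVER packet (RFC 2131 fixed header plus two options)."""
    if len(mac) != 6:
        raise ValueError("mac must be exactly 6 bytes")
    header = struct.pack(
        "!BBBBIHH4s4s4s4s16s64s128s",
        1,            # op: BOOTREQUEST
        1,            # htype: Ethernet
        6,            # hlen: MAC address length
        0,            # hops
        xid,          # transaction id, echoed back by servers
        0,            # secs
        0x8000,       # flags: ask servers to reply via broadcast
        b"\0" * 4,    # ciaddr
        b"\0" * 4,    # yiaddr
        b"\0" * 4,    # siaddr
        b"\0" * 4,    # giaddr
        mac,          # chaddr (padded to 16 bytes by struct)
        b"",          # sname (64 zero bytes)
        b"",          # file (128 zero bytes)
    )
    options = b"\x35\x01\x01" + b"\xff"  # option 53 = DHCPDISCOVER, then end marker
    return header + MAGIC_COOKIE + options


def find_servers(mac: bytes, timeout: float = 5.0) -> Set[str]:
    """Broadcast one discover and return the source IPs of all replies seen.

    Only a discover is sent, never a request, so no lease is claimed.
    The source IP may belong to a relay agent, not the server itself.
    """
    xid = int.from_bytes(os.urandom(4), "big")
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    servers: Set[str] = set()
    try:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.bind(("", 68))          # DHCP client port
        sock.settimeout(timeout)     # give up after this long with no further replies
        sock.sendto(build_discover(mac, xid), ("255.255.255.255", 67))
        while True:
            data, (addr, _port) = sock.recvfrom(4096)
            # Keep only BOOTREPLY packets that answer our transaction id.
            if len(data) >= 240 and data[0] == 2 and data[4:8] == xid.to_bytes(4, "big"):
                servers.add(addr)
    except socket.timeout:
        pass
    finally:
        sock.close()
    return servers
```

Calling something like `find_servers(bytes.fromhex("001122334455"))` (with your own NIC's MAC address in place of that made-up one) should return one address on a healthy network; anything more than the servers you expect deserves a closer look.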
